Kube-Hetzner

A highly optimized and auto-upgradable, HA-able & Load-Balanced, Kubernetes cluster powered by k3s-on-k3os deployed for peanuts on Hetzner Cloud 🤑 🚀

About The Project

Hetzner Cloud is a good cloud provider that offers very affordable prices for cloud instances, with data center locations in both Europe and the US. The goal of this project was to create a highly optimized Kubernetes installation that is easy to maintain, secure, and upgrades itself automatically. We aimed for functionality as close as possible to GKE Autopilot.

Please note that we are not affiliated with Hetzner; this is just an open source project striving to be an optimal solution for deploying and maintaining Kubernetes on Hetzner Cloud.

Features

- Lightweight and resource-efficient Kubernetes powered by k3s on k3os nodes.

- Automatic HA with the default setting of two control-plane nodes and two agent nodes.

- Automatic Traefik ingress controller attached to a Hetzner load balancer with proxy protocol turned on.

- Add or remove as many nodes as you want while the cluster stays running (just change the number of instances and run terraform apply again).

The project deploys with Terraform because it is easy to use and Hetzner provides a great Terraform provider.

Getting started

Follow these simple steps, and your world's cheapest Kube cluster will be up and running in no time.

Prerequisites

First and foremost, you need to have a Hetzner Cloud account. You can sign up for free here.

Then you'll need to have the terraform, helm, and kubectl CLIs installed. The easiest way is to use the gofish package manager to install them.

gofish install terraform && gofish install kubectl && gofish install helm
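
Before going further, it can help to confirm that the three CLIs are actually on your PATH. The following is a minimal sketch, not part of the project: the helper name missing_tools is hypothetical, and it only checks that each command can be found, not which version is installed.

```shell
#!/bin/sh
# Hypothetical helper (not part of this project): print every tool
# from the argument list that cannot be found on PATH.
missing_tools() {
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
  done
}

missing="$(missing_tools terraform kubectl helm)"
if [ -n "$missing" ]; then
  # $missing is left unquoted on purpose so the names print on one line.
  echo "Missing tools:" $missing
else
  echo "All prerequisites found."
fi
```

If anything is reported as missing, install it with gofish (or your preferred package manager) and run the check again.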

⚠️ [Essential step, do not skip] Creating the terraform.tfvars file

- Create a project in your Hetzner Cloud Console, and go to Security > API Tokens of that project to grab the API key. Take note of the key! ✅

- Generate an ssh key pair for your cluster, unless you already have one that you'd like to use. Take note of the respective paths! ✅

- Rename terraform.tfvars.example to terraform.tfvars, and replace the placeholder values with the API key and the ssh key paths from the two steps above. ✅
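
The last step above could be scripted roughly as follows. This is a sketch under a few assumptions: the helper name setup_tfvars is hypothetical, it copies the example file instead of renaming it (so the example stays in the repository), and it tightens the file permissions because the file will hold your API key. You still need to edit the values by hand afterwards.

```shell
#!/bin/sh
# Hypothetical helper (not part of this project): create terraform.tfvars
# from the example file in the given directory (default: current directory).
setup_tfvars() {
  dir="${1:-.}"
  # Never overwrite an existing terraform.tfvars that may already hold real values.
  if [ ! -f "$dir/terraform.tfvars" ]; then
    cp "$dir/terraform.tfvars.example" "$dir/terraform.tfvars" || return 1
  fi
  # The file contains the Hetzner API key, so keep it readable by the owner only.
  chmod 600 "$dir/terraform.tfvars"
}

# Usage, from the project's directory:
#   setup_tfvars
# If you do not have an ssh key pair yet, one way to create it is:
#   ssh-keygen -t ed25519 -f ~/.ssh/id_ed25519
```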

Customize other variables (Optional)

The number of control plane nodes and worker nodes, the Hetzner datacenter location (e.g. nbg1, fsn1, hel1, etc.), and the Hetzner server types (e.g. cpx31, cpx41, etc.) can be customized by adding the corresponding variables to your newly created terraform.tfvars file.

The default values are defined in the variables.tf file. They correspond to the following (you can copy-paste and customize):

servers_num = 2

agents_num = 2

location = "fsn1"

agent_server_type = "cpx21"

control_plane_server_type = "cpx11"

lb_server_type = "lb11"

Installation

terraform init

terraform apply -auto-approve

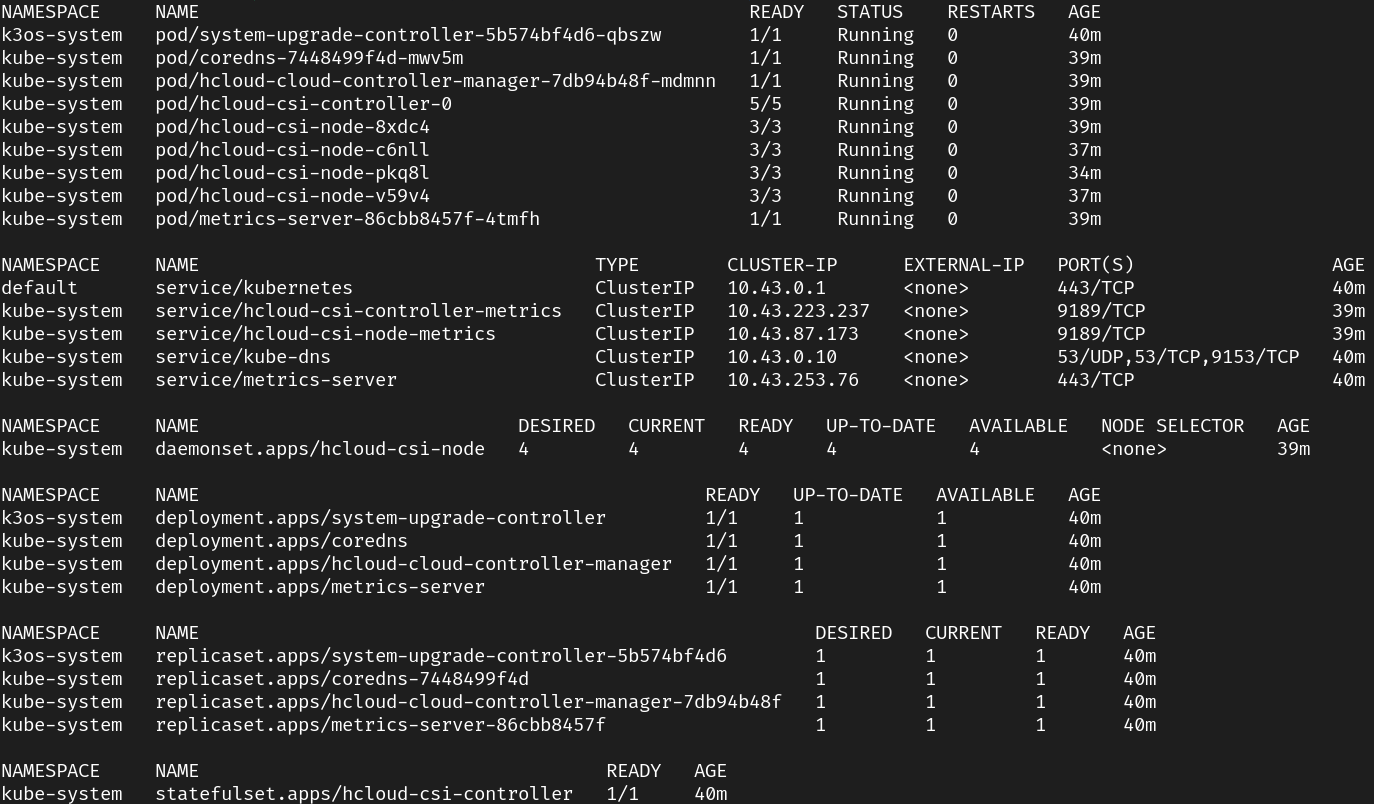

It will take a few minutes to complete, and then you should see a green output with the IP addresses of the nodes. You can then use kubectl against the cluster right away, using the kubeconfig.yaml saved to the project's directory after the install.

Passing --kubeconfig kubeconfig.yaml to every kubectl command works, but for more convenience, either create a symlink from ~/.kube/config to kubeconfig.yaml, or add an export statement to your ~/.bashrc or ~/.zshrc file, as follows:

export KUBECONFIG=/<path-to>/kubeconfig.yaml

Of course, to get the path, you could use the pwd command.
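
As a small convenience, the export line can be generated with the absolute path already filled in. This is only a sketch; the helper name kubeconfig_export_line is hypothetical, and it assumes kubeconfig.yaml lives in the directory you pass in (by default, the current one).

```shell
#!/bin/sh
# Hypothetical helper (not part of this project): print an export statement
# pointing KUBECONFIG at kubeconfig.yaml in the given directory.
kubeconfig_export_line() {
  dir="${1:-$PWD}"
  # Resolve the directory to an absolute path, the same way pwd would report it.
  abs="$(cd "$dir" && pwd)" || return 1
  printf 'export KUBECONFIG="%s/kubeconfig.yaml"\n' "$abs"
}

# Usage, from the project's directory:
#   kubeconfig_export_line >> ~/.bashrc
```

Open a new shell (or source the file) afterwards so the variable takes effect.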

Scaling Nodes

You can scale the number of nodes up and down without disrupting the running cluster. Just add or edit these variables in terraform.tfvars and run terraform apply again.

servers_num = 2

agents_num = 3
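
If you scale often, editing the file can be scripted. The sketch below is an illustration only: set_tfvar is a hypothetical helper that assumes one simple `name = value` assignment per line, which matches the variables shown above but not every possible tfvars layout.

```shell
#!/bin/sh
# Hypothetical helper (not part of this project): set NAME to VALUE in a
# tfvars file, replacing an existing assignment or appending a new one.
# String values must be passed with their quotes, e.g. '"fsn1"'.
set_tfvar() {
  file="$1"; name="$2"; value="$3"
  [ -f "$file" ] || return 1
  tmp="$(mktemp)" || return 1
  # Keep every line except an existing assignment of the same variable.
  grep -v "^[[:space:]]*${name}[[:space:]]*=" "$file" > "$tmp" || true
  printf '%s = %s\n' "$name" "$value" >> "$tmp"
  # Write back in place so the original file permissions are preserved.
  cat "$tmp" > "$file"
  rm -f "$tmp"
}

# Usage, from the project's directory:
#   set_tfvar terraform.tfvars agents_num 3 && terraform apply
```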

Usage

When the cluster is up and running, you can do whatever you wish with it. Enjoy! 🎉

Useful commands

- List your nodes' IPs with either of these:

terraform output

hcloud server list

- See the Hetzner network config:

hcloud network describe k3s-net

- Log into one of your nodes (replace the location of your private key if needed):

ssh rancher@xxx.xxx.xxx.xxx -i ~/.ssh/id_ed25519 -o StrictHostKeyChecking=no

Automatic upgrade

By default, k3os and its embedded k3s instance get upgraded automatically on each node, thanks to the embedded system upgrade controller. If you wish to turn that feature off, remove the k3os.io/upgrade=latest label from the node with the following command:

kubectl label node <nodename> 'k3os.io/upgrade'- --kubeconfig kubeconfig.yaml
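
To turn automatic upgrades off on every node at once, the command above can be wrapped in a loop. This is a sketch rather than a tested part of the project: the function name disable_auto_upgrade is hypothetical, and it assumes the kubeconfig.yaml generated during installation.

```shell
#!/bin/sh
# Hypothetical helper (not part of this project): remove the
# k3os.io/upgrade label from every node in the cluster.
disable_auto_upgrade() {
  kubeconfig="${1:-kubeconfig.yaml}"
  # "-o name" prints one "node/<nodename>" entry per line.
  kubectl get nodes -o name --kubeconfig "$kubeconfig" |
  while IFS= read -r node; do
    # A trailing "-" after the label key removes that label.
    kubectl label "$node" 'k3os.io/upgrade-' --kubeconfig "$kubeconfig" </dev/null
  done
}

# Usage, from the project's directory:
#   disable_auto_upgrade kubeconfig.yaml
```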

As for the Hetzner CCM and CSI, their container images are set to latest with an imagePullPolicy of "Always". This means that when the nodes upgrade, they will be automatically upgraded too.

Takedown

To take down the cluster, first delete the Hetzner CSI and CCM resources and the Traefik load balancer, then destroy the Terraform-managed infrastructure:

kubectl delete -k hetzner/csi --kubeconfig kubeconfig.yaml

kubectl delete -k hetzner/ccm --kubeconfig kubeconfig.yaml

hcloud load-balancer delete traefik

terraform destroy -auto-approve

Also, if you had a full-blown cluster in use, it would be best to delete the whole project in your Hetzner account directly as operators or deployments may create other resources during regular operation.

Roadmap

See the open issues for a list of proposed features (and known issues).

Contributing

Any contributions you make are greatly appreciated.

- Fork the Project

- Create your Branch (git checkout -b AmazingFeature)

- Commit your Changes (git commit -m 'Add some AmazingFeature')

- Push to the Branch (git push origin AmazingFeature)

- Open a Pull Request

License

This project is distributed as-is under the MIT License. See LICENSE for more information.

Contributors

- Karim Naufal - @mysticaltech

Acknowledgements

- k-andy was the starting point for this project. It wouldn't have been possible without it.

- Best-README-Template made writing this README a lot easier.

- k3os-hetzner was the inspiration for the k3os installation method.

- Hetzner Cloud for providing a solid infrastructure and Terraform provider.

- HashiCorp for the amazing Terraform framework that makes all the magic happen.

- Rancher for k3s and k3os, robust and innovative technologies that are the very core engine of this project.